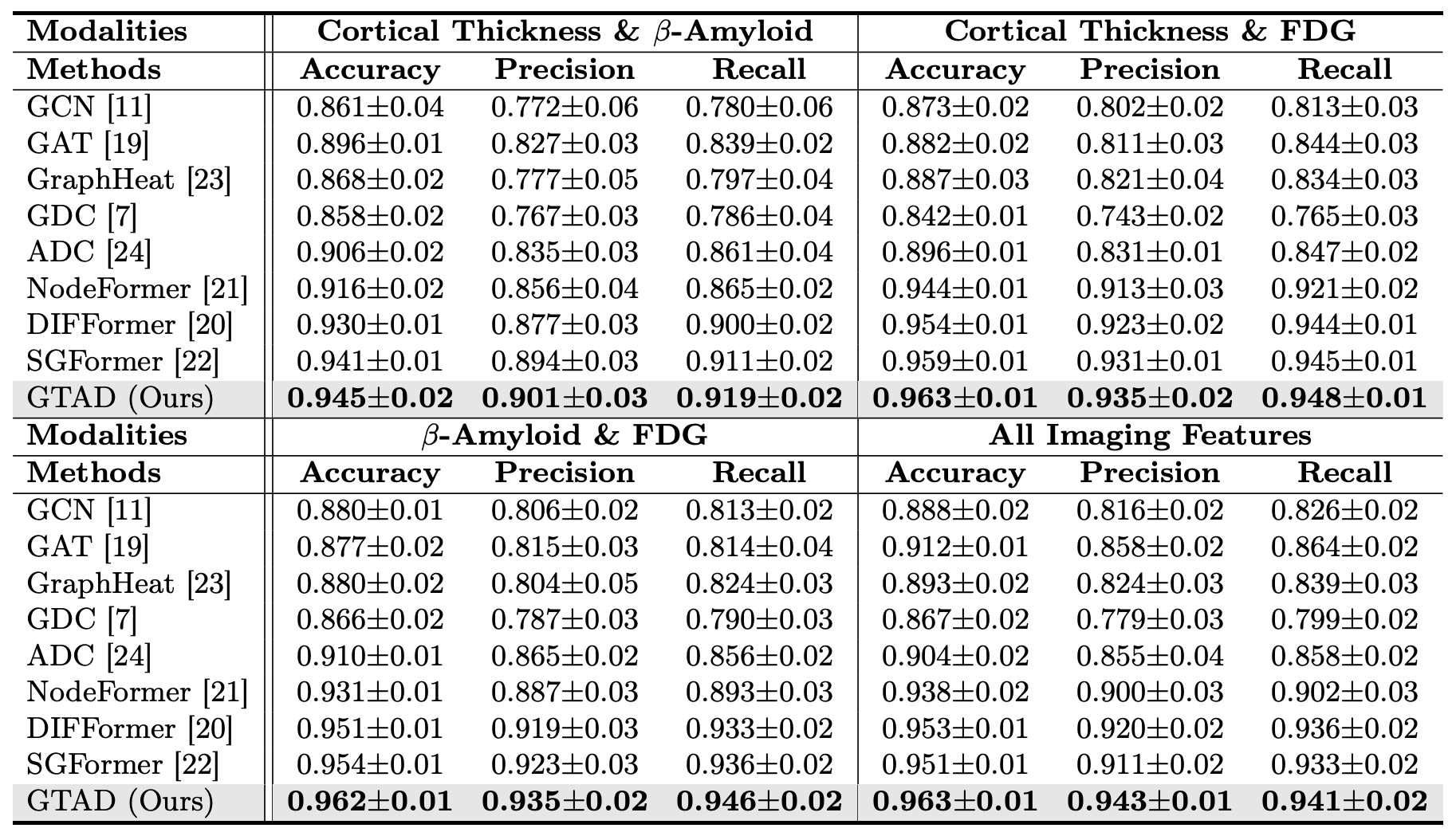

Table: Preclinical AD classification performance (CN/SMC/EMCI) on ADNI data.

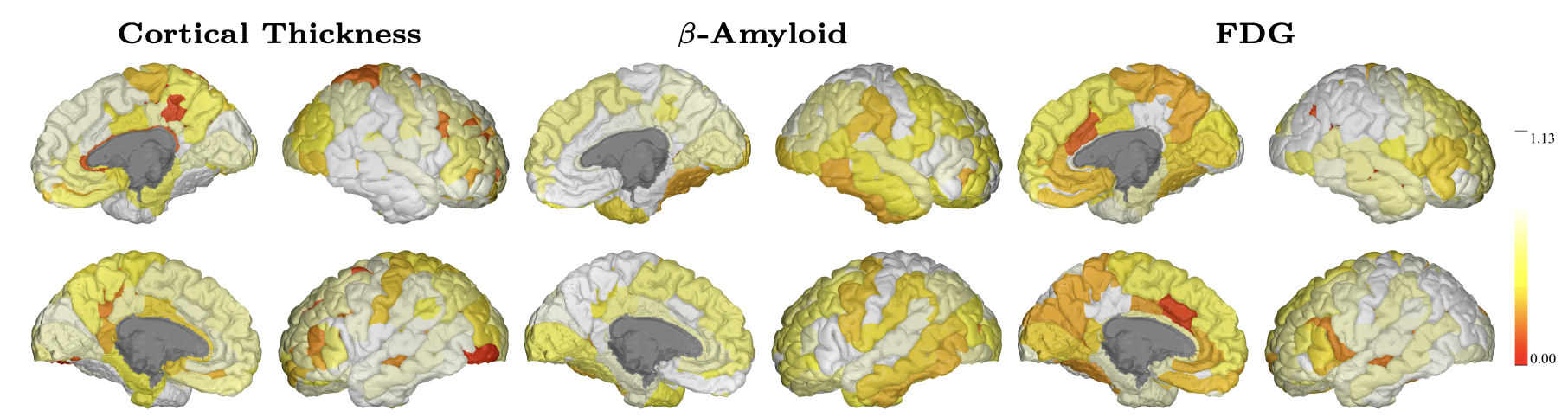

Figure: Visualization of learned scales on the cortical regions of left (top) and right (bottom) hemispheres.

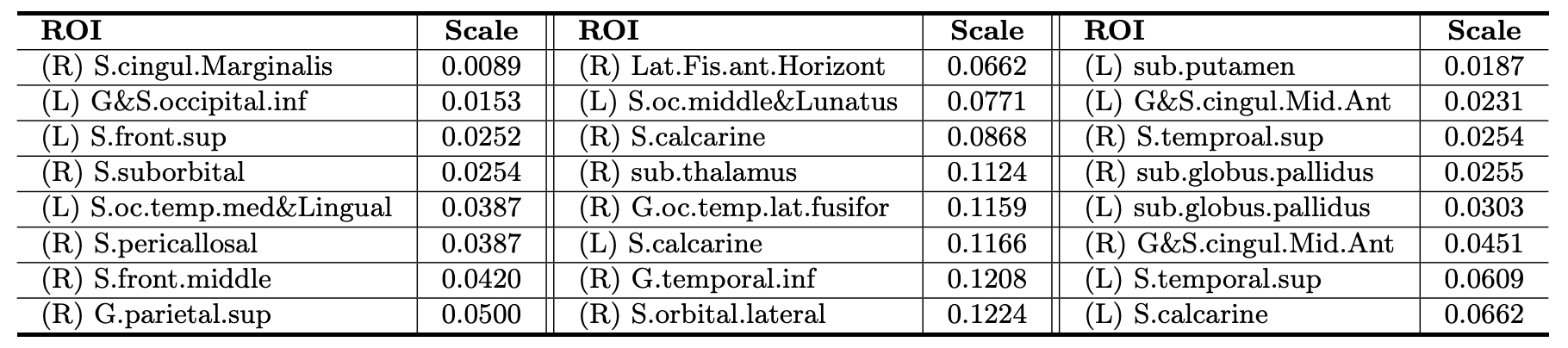

Table: The 8 localized ROIs with the smallest trained scales for classification. (L) and (R) denote the left and right hemispheres, respectively.

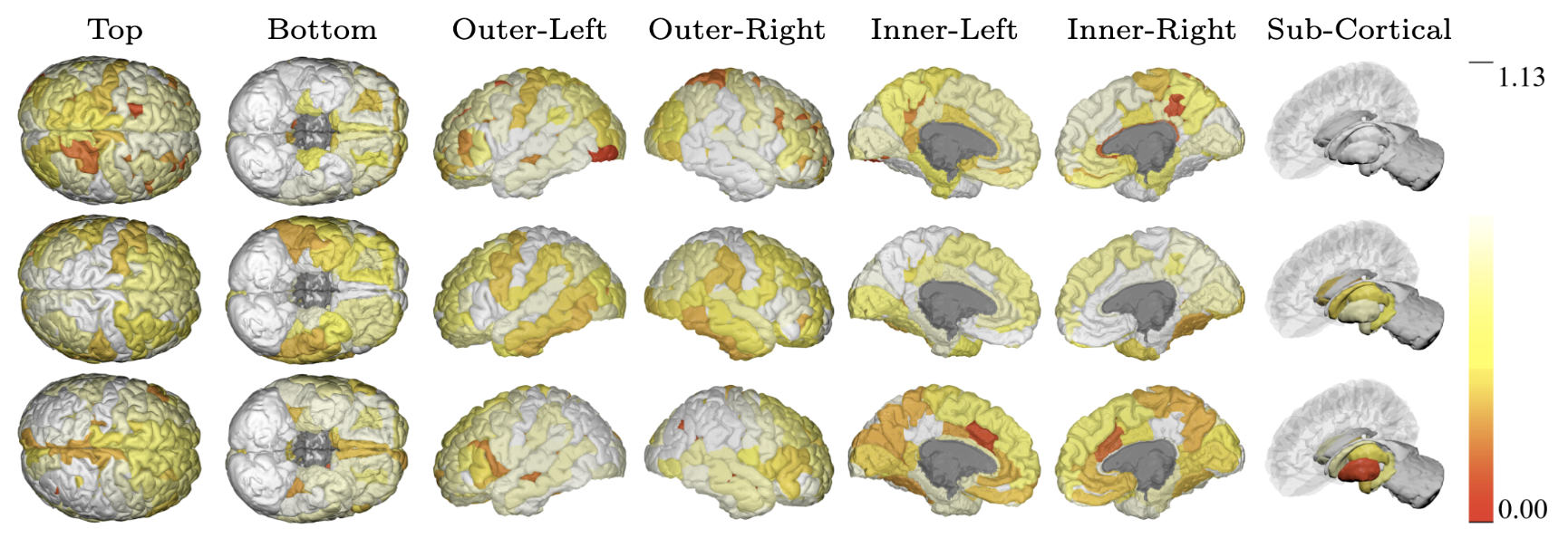

Figure: Visualization of learned scales on the cortical regions of the brain for three biomarkers: cortical thickness (top), β-Amyloid (middle), and FDG (bottom).

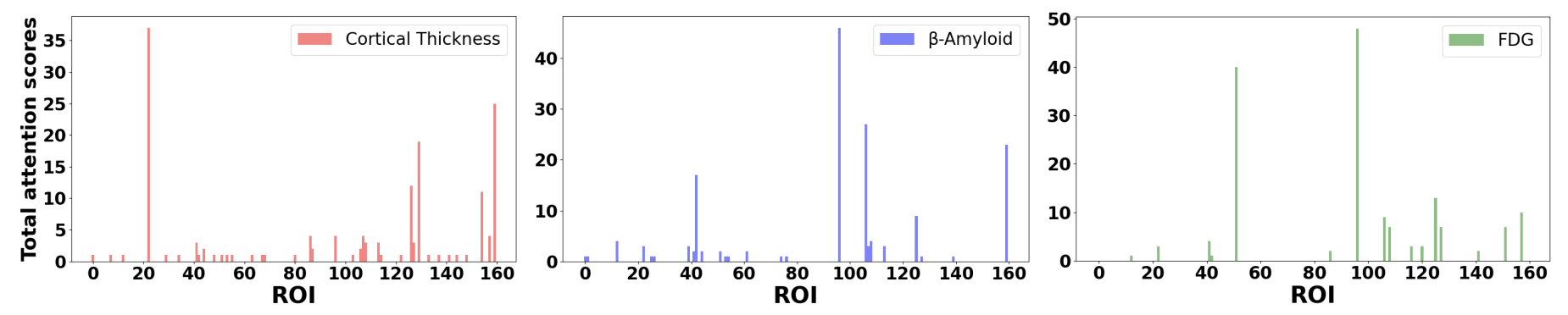

Figure: Distribution of attention scores across all brain regions for cortical thickness (left), β-Amyloid (center), and FDG (right).

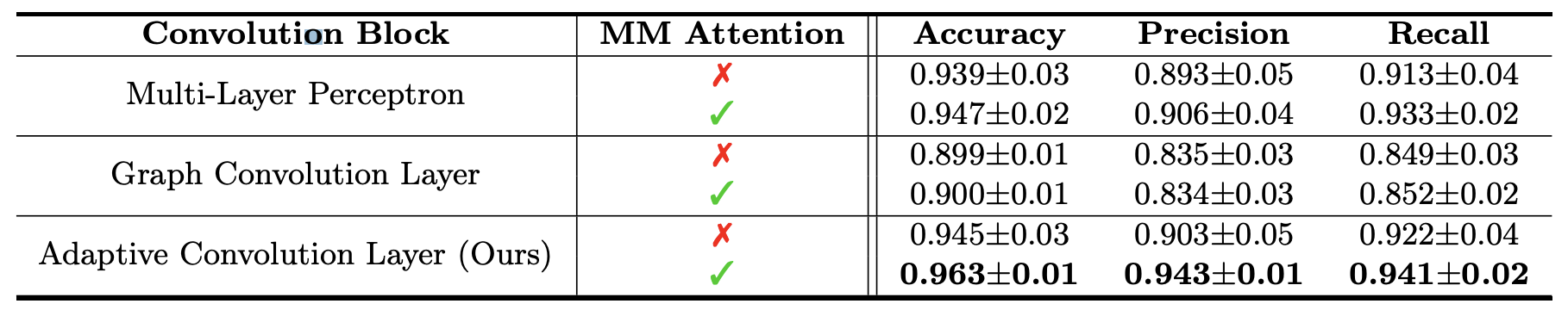

Table: ROIs with the 5 highest attention scores for classification. Importance Rate (IR) indicates how many ROIs attend to each listed ROI. (L) and (R) denote the left and right hemispheres, respectively.

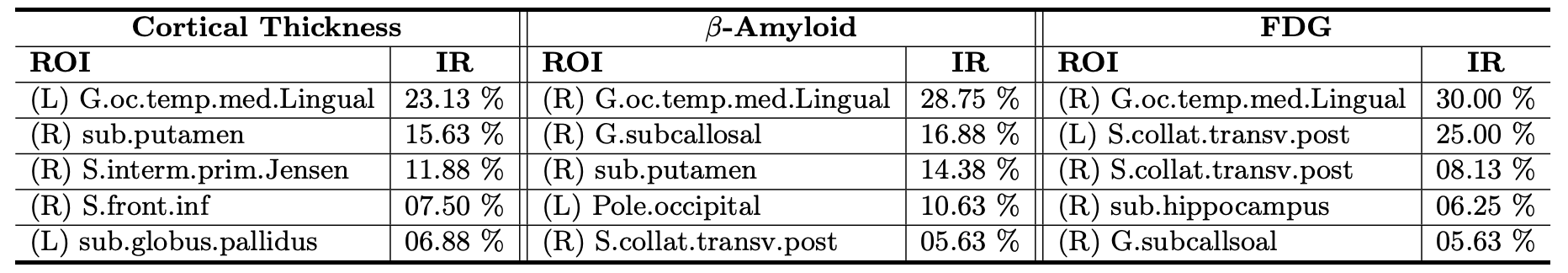

Table: Performance comparison of different blocks. For the attention block, our multimodal (MM) attention is compared with existing position-wise attention.

In this work, we proposed GTAD, a novel end-to-end framework that dynamically defines node-centric ranges for each imaging modality via a diffusion kernel, guided by a subsequent transformer. The framework captures local characteristics on graphs by optimizing node-wise scales separately for each imaging modality, and obtains a global representation through multi-modal self-attention, which guides the model toward better predictions. By leveraging multiple imaging measures, GTAD achieves improved performance in preclinical AD classification, and the results reveal disease-specific variation through key AD-related ROIs in the brain.
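The idea of node-centric ranges set by per-node diffusion scales can be illustrated with a heat kernel. The following is a minimal NumPy sketch, assuming a heat kernel exp(-sL) on the symmetric normalized graph Laplacian, where node i filters the signal at its own scale s_i and each modality has a separate scale vector. The kernel choice, the normalization, and the helper names (`normalized_laplacian`, `nodewise_heat_diffusion`, `multimodal_diffusion`) are illustrative assumptions, not GTAD's released implementation. In the actual model the scales are trained end to end under guidance from the transformer's multi-modal attention (not shown here); in this sketch they are fixed numbers.

```python
import numpy as np


def normalized_laplacian(A):
    """Symmetric normalized Laplacian L = I - D^{-1/2} A D^{-1/2}."""
    deg = A.sum(axis=1)
    # Isolated nodes (degree 0) get a zero scaling factor instead of dividing by zero.
    d_inv_sqrt = np.zeros_like(deg)
    nz = deg > 0
    d_inv_sqrt[nz] = 1.0 / np.sqrt(deg[nz])
    return np.eye(A.shape[0]) - d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]


def nodewise_heat_diffusion(A, X, scales):
    """Diffuse node features X (n x f) where node i uses its own scale s_i.

    Row i of the result equals row i of exp(-s_i L) @ X, using
    exp(-s L) = U diag(exp(-s * lam)) U^T from the eigendecomposition of L.
    """
    L = normalized_laplacian(A)
    lam, U = np.linalg.eigh(L)  # L is symmetric, so eigh is appropriate
    # decay[i, k] = exp(-s_i * lam_k): each node's own spectral filter
    decay = np.exp(-np.outer(scales, lam))
    # kernel[i, j] = sum_k U[i, k] * decay[i, k] * U[j, k] = [exp(-s_i L)]_{ij}
    kernel = (U * decay) @ U.T
    return kernel @ X


def multimodal_diffusion(A, features_by_modality, scales_by_modality):
    """Apply node-wise diffusion separately per modality, each with its own scales."""
    return {
        name: nodewise_heat_diffusion(A, X, scales_by_modality[name])
        for name, X in features_by_modality.items()
    }


# Hypothetical toy graph: 4 ROIs on a path, one scalar feature per ROI per modality.
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
features = {
    "thickness": np.array([[2.5], [2.4], [2.1], [2.6]]),
    "amyloid": np.array([[1.1], [1.3], [1.6], [1.0]]),
}
# Hypothetical scales: small -> nearly local; large -> aggregates from farther ROIs.
scales = {
    "thickness": np.array([0.1, 0.1, 2.0, 0.5]),
    "amyloid": np.array([1.0, 0.2, 0.2, 3.0]),
}
out = multimodal_diffusion(A, features, scales)
```

A scale near zero keeps a node's representation close to its own feature, while a larger scale mixes in information from more distant ROIs, which is why ROIs with the smallest learned scales can be read as the most localized ones. The eigendecomposition costs O(n^3), which is manageable for atlas-sized brain graphs with on the order of a hundred ROIs.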

@inproceedings{sim2024multi,
  title={Multi-modal Graph Neural Network with Transformer-Guided Adaptive Diffusion for Preclinical Alzheimer Classification},
  author={Sim, Jaeyoon and Lee, Minjae and Wu, Guorong and Kim, Won Hwa},
  booktitle={International Conference on Medical Image Computing and Computer-Assisted Intervention},
  pages={511--521},
  year={2024},
  organization={Springer}
}